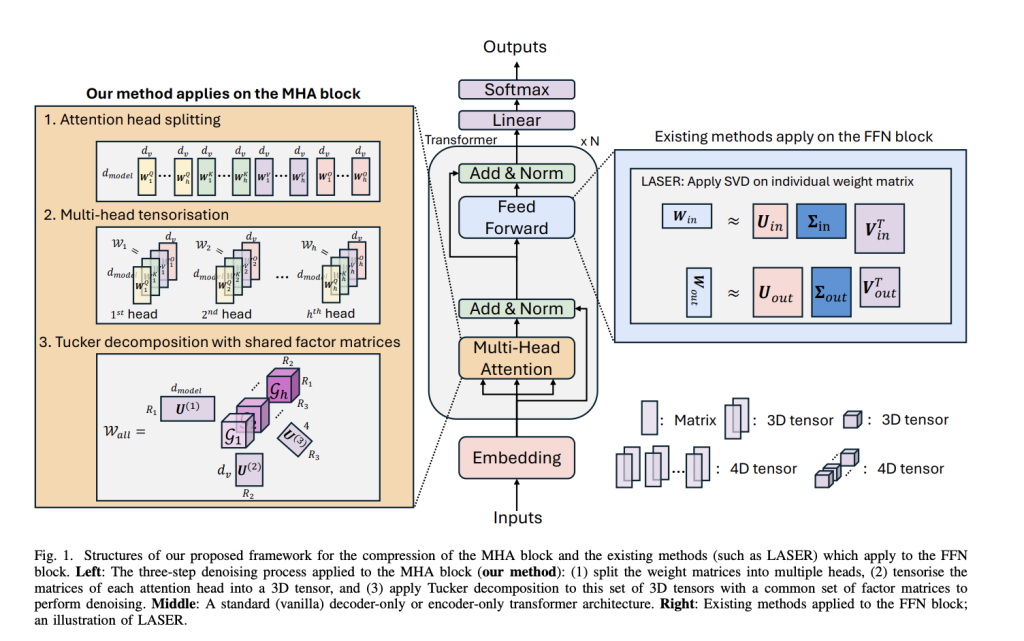

TensorLLM: Enhancing Reasoning and Efficiency in Large Language Models through Multi-Head Attention Compression and Tensorisation

LLMs based on transformer architectures, such as the GPT and LLaMA series, have excelled in NLP tasks due to their extensive